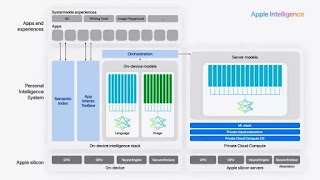

Apple Intelligence was officially announced yesterday at WWDC. It will run on the iPhone, iPad, MacBook and Vision Pro to perform artificial intelligence (AI) tasks such as summarizing long documents and generating images from prompts. On the second day of WWDC, Apple shared more information about Apple Intelligence. Contrary to speculation, the models were developed in-house by Apple and not by OpenAI, as Elon Musk had feared.

Two models are used. The first, with 3 billion parameters, runs directly on the device. It helps with image generation (Image Playground, Genmoji and Image Wand) and text summarization in Notes. According to Apple, this model outperforms Microsoft's Phi-3-mini.

The second model runs on Apple's Private Cloud Compute servers. Apple has not disclosed how many parameters it has. Apple says only that the on-device model has a vocabulary size of 49K while the server model has a vocabulary size of 100K. In tests graded by human evaluators, both Apple-developed models had lower error rates than Gemma-7B, Gemma-2B, Phi-3-mini and Mistral-7B.

Both models were trained on open source data, and no user-owned personal data was used.